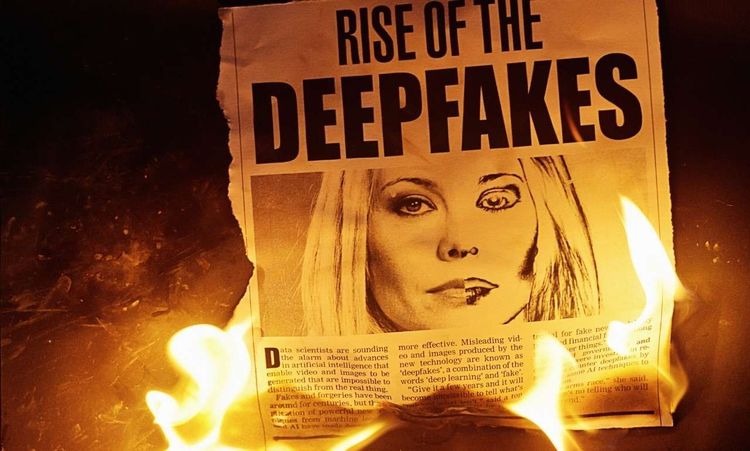

Something strange happened to me last week. I watched a video of a celebrity giving a speech, shared it with friends, and learned hours later that it was completely fake. This isn't just my problem anymore; it's everyone's. Deepfakes have crashed the party of digital content like uninvited guests who won't leave, and they're reshaping how we think about truth in media. Every image, every video clip now carries a question mark. Can we trust what we see? News outlets scramble to verify content that looks perfectly real. Social media users hesitate before hitting "share." The old rules of media consumption don't work when computers can put words in anyone's mouth or actions in anyone's hands. This isn't some distant future scenario. It's happening right now, changing how we create, consume, and think about digital content.

Erosion of Public Trust

The Cracks Are Showing

Trust used to be simpler. You saw Walter Cronkite on TV, and you believed him. Today's media landscape runs on different rules. Audiences approach every piece of content with detective-level suspicion. Recent polling points to something alarming: many people now doubt digital content before they believe it, a sharp shift from only a few years ago. News organizations that built their reputations over decades now find themselves proving their credibility daily. The effects ripple outward like rings from a stone dropped into still water. Advertising loses impact when consumers question every visual. Educational content faces skepticism from students who've learned to distrust their own eyes. Even family photos shared on social platforms trigger authenticity debates.

Rebuilding What's Been Lost

Smart media companies aren't just complaining about the problem—they're solving it. They're implementing new verification systems that work like digital fingerprints. Each piece of content gets tagged with information about its creation, editing, and distribution. But here's the catch: technical solutions only work when people understand them. Most consumers can't read cryptographic signatures or interpret metadata. The industry needs to translate complex authentication into simple trust signals that regular people can use. Some outlets now include "authenticity scores" with their visual content. Others provide detailed sourcing information that reads like a recipe for verification. These approaches help, but they require audiences to change their media consumption habits fundamentally.
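
The "digital fingerprint" idea can be made concrete with a short sketch. The Python below is a minimal illustration under stated assumptions, not how any particular standard or newsroom does it: it hashes the content with SHA-256, bundles that fingerprint with creation and editing notes, and signs the bundle. For simplicity it uses a shared-secret HMAC as a stand-in for the public-key, certificate-backed signatures real provenance systems use; the key and the function names (`make_manifest`, `verify`) are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical publisher secret. ASSUMPTION: real provenance systems (e.g. C2PA)
# use public-key signatures backed by certificates, not a shared HMAC key.
PUBLISHER_KEY = b"example-secret-key"

def fingerprint(content: bytes) -> str:
    """SHA-256 digest: changes if any byte of the content changes."""
    return hashlib.sha256(content).hexdigest()

def make_manifest(content: bytes, creator: str, edits: list[str]) -> dict:
    """Bundle the fingerprint with creation and editing info, then sign the bundle."""
    claims = {"sha256": fingerprint(content), "creator": creator, "edits": edits}
    payload = json.dumps(claims, sort_keys=True).encode()
    signature = hmac.new(PUBLISHER_KEY, payload, hashlib.sha256).hexdigest()
    return {"claims": claims, "signature": signature}

def verify(content: bytes, manifest: dict) -> bool:
    """True only if the manifest is unaltered AND it matches this exact content."""
    payload = json.dumps(manifest["claims"], sort_keys=True).encode()
    expected = hmac.new(PUBLISHER_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the manifest itself was tampered with
    return manifest["claims"]["sha256"] == fingerprint(content)

photo = b"raw image bytes"
manifest = make_manifest(photo, creator="Newsroom Photo Desk", edits=["crop", "exposure"])
print(verify(photo, manifest))         # True
print(verify(photo + b"x", manifest))  # False: the content changed
```

If a single byte of the image changes, or someone edits the manifest to claim a different creator, `verify` returns `False`. The hard part, as noted above, is turning that yes-or-no answer into a signal ordinary readers understand.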

Spread of Misinformation and Disinformation

The Perfect Storm for False Information

Remember when creating convincing fake videos required Hollywood-level resources? Those days are gone. Today, anyone with a laptop and internet connection can produce content that looks professionally made. The democratization of deceptive technology creates problems we've never faced before. Political operatives use deepfakes to damage opponents. Scammers target elderly relatives with fake family emergency videos. Corporate competitors spread false information about each other's products. The speed of creation and distribution outpaces our ability to fact-check. By the time verification happens, false content has already shaped public opinion. It's like trying to stop a wildfire with a garden hose.

When Fiction Becomes "Fact"

Here's what makes this particularly dangerous: social media algorithms don't care about truth. They care about engagement. Shocking, emotional content—whether real or fake—gets amplified faster than measured, accurate reporting. I've watched false stories race around the internet while corrections crawl behind them. The psychological impact is devastating. People feel foolish when they discover they've shared fake content, leading some to stop sharing altogether. News organizations face an impossible choice: publish quickly to stay relevant, or verify thoroughly and risk being scooped by less scrupulous competitors. The pressure favors speed over accuracy, creating more opportunities for false information to slip through.

Challenges in Content Verification

The Technical Arms Race

Verification technology fights a losing battle against creation technology. Every advance in detection triggers improvements in generation. It's like upgrading your car's security system only to find thieves have already adapted to it. Current detection methods work well against amateur deepfakes but struggle with sophisticated ones. Thorough automated verification can demand more computing power than many newsrooms can afford, so many outlets rely on visual inspection, which fails against high-quality synthetic content. Time makes everything worse. Breaking news demands immediate publication, but proper verification can take hours or days. Media organizations must choose between speed and certainty, and they often choose speed.

Scaling the Mountain of Content

Consider the volume: millions of images and videos get uploaded every hour. Manual verification is impossible at this scale. Automated systems help but generate false positives that waste human reviewer time. Different platforms use different standards, creating a fragmented verification landscape. Content that passes screening on one site might fail on another. This inconsistency confuses users and creates loopholes for malicious actors. The expertise gap is real. Many media professionals lack training in synthetic media detection. They rely on instinct and experience that doesn't apply to AI-generated content. Training programs exist but can't keep pace with technological changes.
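
A little arithmetic shows why false positives swamp reviewers at this scale. The sketch below uses purely hypothetical numbers, not measurements of any real platform or detector: one million uploads an hour, 0.1% of them synthetic, and a detector that catches 95% of fakes while wrongly flagging 1% of genuine items.

```python
def expected_review_load(uploads_per_hour: float, fake_rate: float,
                         true_positive_rate: float, false_positive_rate: float) -> dict:
    """Estimate how many items a detector flags per hour and how many are false alarms."""
    fakes = uploads_per_hour * fake_rate
    genuine = uploads_per_hour - fakes
    caught = fakes * true_positive_rate            # real fakes the detector flags
    false_alarms = genuine * false_positive_rate   # genuine items flagged by mistake
    flagged = caught + false_alarms
    # Precision: share of flagged items that are actually fake.
    precision = caught / flagged if flagged else 0.0
    return {"flagged": flagged, "false_alarms": false_alarms, "precision": precision}

# Hypothetical inputs, for illustration only.
load = expected_review_load(1_000_000, fake_rate=0.001,
                            true_positive_rate=0.95, false_positive_rate=0.01)
print(f"flagged per hour: {load['flagged']:.0f}")       # flagged per hour: 10940
print(f"false alarms:     {load['false_alarms']:.0f}")  # false alarms:     9990
print(f"precision:        {load['precision']:.1%}")     # precision:        8.7%
```

Under those assumptions the detector flags about 10,940 items an hour, and roughly 9,990 of them are genuine. Fewer than 9% of flagged items are actually fake, even though a 1% false positive rate sounds small, and the rarer fakes are relative to genuine content, the worse that ratio gets.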

Legal and Ethical Dilemmas

Laws That Can't Keep Up

Our legal system moves at the speed of legislation while technology moves at the speed of code. Laws written for traditional media don't address synthetic content adequately. Proving intent becomes nearly impossible when anyone can claim their deepfake was "just a joke." International jurisdiction issues complicate enforcement. Content created in one country, hosted in another, and distributed globally challenges traditional legal frameworks. Bad actors exploit these gaps routinely. Courts struggle with AI-generated evidence. How do you authenticate a video when video itself can be artificially created? Legal professionals need new expertise in digital forensics and synthetic media detection.

The Moral Maze

Content creators face ethical questions that didn't exist five years ago. When does enhancement become deception? If you use AI to improve lighting in a news photo, are you manipulating reality? The consent issue is complex. Using someone's likeness in synthetic media raises privacy concerns that existing frameworks don't address. What rights do people have over their digital selves? Educational institutions debate whether to teach deepfake creation. They want students to understand the technology but worry about enabling misuse. It's like teaching lock-picking to security students—necessary but risky.

Real-World Examples of Deepfakes in Media

Fake Image of Pentagon Explosion

In May 2023, an AI-generated image appearing to show an explosion near the Pentagon spread across social media faster than any correction could follow. It looked convincing enough that some news outlets and verified accounts initially passed it along as breaking news. Markets reacted within minutes: major US stock indexes dipped briefly before anyone had confirmed the image was fake, and trading algorithms reacting to social media chatter may have amplified the move, turning fake news into real financial consequences. Prices recovered once authorities confirmed that no explosion had occurred, but the point had been made. The incident showed that synthetic media can trigger real-world events with economic consequences. It also highlighted how quickly false information can travel through legitimate news channels during a breaking story.

Pope Francis AI Puffer Coat

Images of Pope Francis wearing a stylish white puffer coat went viral in March 2023 and fooled many viewers worldwide. Created with the AI image generator Midjourney, they looked professionally photographed and entirely believable to people scrolling quickly through their feeds. The images spread widely as real before observers pointed out telltale flaws, such as a distorted hand, and their AI origin was confirmed. For many people, this was their first encounter with convincing AI-generated content, and it became a widely cited teaching moment about what the technology can do. The fake succeeded because it was unexpected but plausible: it played on our assumptions about what a religious leader might wear in private. The incident also sparked conversations about requiring labels for synthetic media across all platforms.

Solutions to Preserve Media Authenticity

Advanced Detection Technologies

Researchers are developing increasingly sophisticated tools to identify synthetic content. These systems analyze everything from pixel-level inconsistencies to behavioral patterns that humans can't detect. Machine learning algorithms learn to spot the "tells" that reveal artificial generation. Computer vision systems examine lighting inconsistencies and facial feature irregularities. Audio analysis tools check for frequency patterns that indicate synthetic speech. The challenge is staying ahead of creation technology. Detection developers must anticipate new generation techniques before they become widespread. It's like playing chess against an opponent who keeps changing the rules.

Multi-Modal Detection Approaches

Single-method detection isn't enough anymore. Comprehensive verification requires analyzing visual, audio, and metadata elements simultaneously. This approach reduces false positives and catches sophisticated fakes that fool individual detection methods. Visual analysis examines lighting, shadows, and facial movements. Audio examination focuses on speech patterns and background consistency. Metadata investigation looks at creation timestamps and device information. Combining multiple detection methods creates a more complete picture of content authenticity. However, it also requires more computational resources and technical expertise than many organizations possess.
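
One common way to combine detectors is score-level ("late") fusion: each detector reports a score, and a weighted average decides whether to flag the item. The sketch below assumes each detector outputs a calibrated probability that the content is synthetic; the weights, the threshold, and the `ModalityScore` type are hypothetical choices for illustration, not values from any real system.

```python
from dataclasses import dataclass

@dataclass
class ModalityScore:
    name: str         # e.g. "visual", "audio", "metadata"
    fake_prob: float  # ASSUMED calibrated probability (0..1) that content is synthetic
    weight: float     # hypothetical trust placed in this detector

def fuse(scores: list[ModalityScore], threshold: float = 0.7) -> tuple[float, bool]:
    """Weighted late fusion: average per-modality scores, then apply one threshold."""
    total_weight = sum(s.weight for s in scores)
    if total_weight <= 0:
        raise ValueError("need at least one positively weighted score")
    fused = sum(s.fake_prob * s.weight for s in scores) / total_weight
    return fused, fused >= threshold

# Only the visual detector is suspicious about this clip.
scores = [
    ModalityScore("visual", 0.9, weight=0.5),
    ModalityScore("audio", 0.2, weight=0.3),
    ModalityScore("metadata", 0.1, weight=0.2),
]
fused, flagged = fuse(scores)
print(f"{fused:.2f}", flagged)  # 0.53 False
```

Here a strong visual signal alone does not trigger a flag, which is one way combining methods can cut false positives. The trade-off runs the other way too: a fake that fools two of the three detectors may slip under the threshold, so weights and thresholds have to be tuned against real data.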

Authentication Standards

C2PA Content Credentials represent the industry's attempt to create a universal authentication standard. Under the C2PA specification, content carries a cryptographically signed manifest, backed by digital certificates, that records how it was created and edited. Digital watermarking embeds invisible markers designed to survive compression and format changes. Blockchain systems create tamper-evident records of content provenance. Together, these technologies can form a comprehensive authentication framework. The main challenge is adoption: standards only work when everyone uses them. Getting every camera manufacturer, social media platform, and news organization to implement the same authentication system requires unprecedented cooperation.
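
The tamper-evident record behind blockchain-style provenance rests on a simple idea: each record stores the hash of the one before it, so altering any earlier entry breaks the next link. The Python below is a minimal, single-machine sketch of that hash chain; it is not the C2PA manifest format or any particular blockchain, and the function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def entry_hash(entry: dict) -> str:
    """Hash a record deterministically (sorted keys so field order doesn't matter)."""
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()

def append(chain: list[dict], action: str, content: bytes) -> None:
    """Add an edit record that commits to the previous record's hash."""
    prev = entry_hash(chain[-1]) if chain else GENESIS
    chain.append({
        "action": action,
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "prev_hash": prev,
    })

def is_intact(chain: list[dict]) -> bool:
    """Recompute every link; changing any earlier record breaks the link after it."""
    if chain and chain[0]["prev_hash"] != GENESIS:
        return False
    for i in range(1, len(chain)):
        if chain[i]["prev_hash"] != entry_hash(chain[i - 1]):
            return False
    return True

history: list[dict] = []
append(history, "captured", b"original pixels")
append(history, "cropped", b"cropped pixels")
append(history, "color-corrected", b"final pixels")
print(is_intact(history))  # True

history[0]["action"] = "generated"  # try to rewrite the origin story
print(is_intact(history))  # False
```

On its own, a hash chain is tamper-evident rather than tamper-proof: someone who controls the whole list could recompute every hash from scratch. Real systems close that gap by signing the records or anchoring them somewhere a forger cannot rewrite, such as a distributed ledger.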

Regulatory Measures

Governments worldwide are developing new frameworks to address deepfake-related crimes. These laws must weigh protecting innovation against preventing harm, which is a delicate task. Some jurisdictions require platforms to label AI-generated content. Others mandate rapid response systems for reported synthetic media. International cooperation agreements help track and prosecute cross-border deepfake crimes. The regulatory approach varies significantly between countries. What's illegal in one jurisdiction might be protected speech in another. This patchwork of laws creates enforcement challenges and safe havens for malicious actors.

Integration of Verifiable AI

The solution isn't eliminating AI from content creation; it's making AI use transparent and verifiable. Legitimate AI applications can enhance storytelling and improve production quality when used responsibly. Content creators are learning to disclose AI usage appropriately. Some outlets label AI-enhanced content clearly. Others provide detailed information about what synthetic elements they've included and why. Provenance and disclosure systems aim to help audiences tell harmful deepfakes apart from legitimate, openly disclosed AI-assisted content. This nuanced approach preserves creative freedom while protecting against deception.

Conclusion

The battle for media authenticity isn't just about technology—it's about trust, truth, and the future of human communication. Deepfakes have fundamentally changed the game, but they haven't made truth impossible to find. Solutions require cooperation between creators, platforms, regulators, and audiences. Technology provides tools, but people must choose to use them. Education builds awareness, but individuals must apply that knowledge. The stakes couldn't be higher. In a world where seeing is no longer believing, we must build new ways to distinguish truth from fiction. The alternative—a post-truth society where nothing can be verified—is too dangerous to accept. Success depends on our collective commitment to authenticity over convenience, truth over sensation, and trust over suspicion. The future of media authenticity rests in our hands.